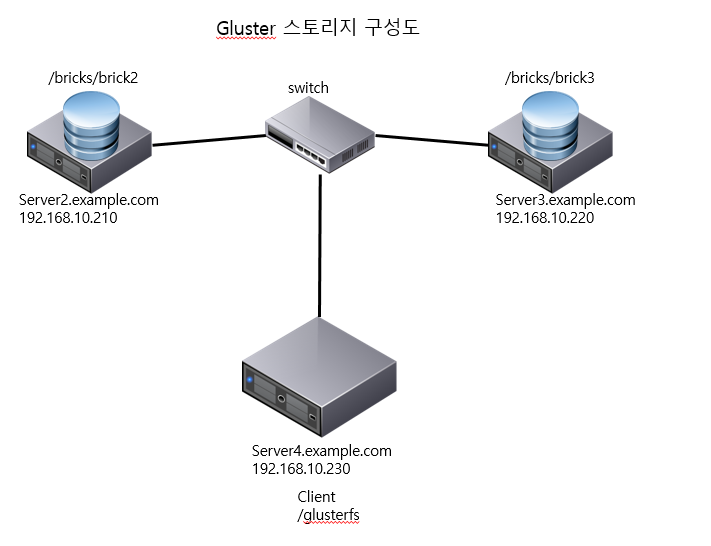

<Gluster Distribute setup>

Distributed storage: each file is stored whole on one of the volume's bricks.

server2 setup

[root@server2 ~]# lsblk

....

sdb 8:16 0 1G 0 disk

[root@server2 yum.repos.d]# dnf -y install centos-release-gluster8

[root@server2 yum.repos.d]# dnf repolist

repo id repo name

appstream CentOS Linux 8 - AppStream

baseos CentOS Linux 8 - BaseOS

centos-gluster8 CentOS-8 - Gluster 8

epel Extra Packages for Enterprise Linux 8 - x86_64

epel-modular Extra Packages for Enterprise Linux Modular 8 - x86_64

extras CentOS Linux 8 - Extras

powertools CentOS Linux 8 - PowerTools

[root@server2 yum.repos.d]# dnf config-manager --set-enabled centos-gluster8 # enable the centos-gluster8 repo

[root@server2 yum.repos.d]# dnf -y install glusterfs-server

[root@server2 yum.repos.d]# systemctl start glusterd

[root@server2 yum.repos.d]# systemctl enable glusterd

[root@server2 yum.repos.d]# systemctl status glusterd

[root@server2 yum.repos.d]# gluster --version

glusterfs 8.3

Register the hosts (the same entries are needed on every node)

[root@server2 yum.repos.d]# vi /etc/hosts

192.168.10.210 server2.example.com

192.168.10.220 server3.example.com

192.168.10.230 server4.example.com
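If you maintain these entries with a script instead of vi, the idea is simply to append any missing "IP hostname" pairs without duplicating lines that already exist. A minimal Python sketch; `merge_hosts` and `CLUSTER_HOSTS` are hypothetical names, and the function only transforms text (it does not write /etc/hosts itself):

```python
from __future__ import annotations

# Hypothetical mapping copied from the /etc/hosts entries above.
CLUSTER_HOSTS = {
    "192.168.10.210": "server2.example.com",
    "192.168.10.220": "server3.example.com",
    "192.168.10.230": "server4.example.com",
}


def merge_hosts(existing: str, entries: dict[str, str]) -> str:
    """Return the hosts-file text with any missing 'IP hostname' lines appended."""
    lines = existing.splitlines()
    known: set[str] = set()
    for line in lines:
        # Drop comments, then treat every field after the IP as a hostname or alias.
        fields = line.split("#", 1)[0].split()
        known.update(fields[1:])
    for ip, name in entries.items():
        if name not in known:
            lines.append(f"{ip} {name}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(merge_hosts("127.0.0.1 localhost\n", CLUSTER_HOSTS), end="")
```

Running it a second time on its own output returns the same text, so it is safe to apply on every node.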

Open the GlusterFS ports in the firewall

[root@server2 yum.repos.d]# firewall-cmd --permanent --zone=public --add-service=glusterfs

[root@server2 yum.repos.d]# firewall-cmd --reload

Partition the second disk (/dev/sdb) into a single partition

[root@server2 /]# fdisk /dev/sdb

[root@server2 /]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

...

sdb 8:16 0 1G 0 disk

└─sdb1 8:17 0 1023M 0 part

[root@server2 /]# mkfs.xfs -i size=512 /dev/sdb1 # Gluster recommends XFS with a 512-byte inode size

[root@server2 /]# lsblk -f /dev/sdb1

[root@server2 /]# vi /etc/fstab

/dev/sdb1 /bricks xfs defaults 0 0

[root@server2 /]# mkdir /bricks

[root@server2 /]# mount /dev/sdb1 /bricks/

[root@server2 /]# df -Th

/dev/sdb1 xfs 1017M 40M 978M 4% /bricks

[root@server2 /]# mkdir -p /bricks/brick2

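Run the probe only after glusterd is running on server3 (see the SERVER 3 setup below); server3's brick directory /bricks/brick3 must also exist before the volume create step.

[root@server2 /]# gluster peer probe server3.example.com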

[root@server2 /]# gluster peer status

Number of Peers: 1

Hostname: server3.example.com

Uuid: 012e9d88-05fe-4eaf-bb7f-44eb35b0221f

State: Peer in Cluster (Connected)

[root@server2 /]# gluster pool list

UUID Hostname State

012e9d88-05fe-4eaf-bb7f-44eb35b0221f server3.example.com Connected

28054617-75f7-498f-9098-0f247042a5ef localhost Connected
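Before creating a volume it is worth confirming that every peer reports Connected. A minimal Python sketch that parses the text output shown above; it assumes the three-column layout (UUID, Hostname, State) from this transcript, and the function names are hypothetical:

```python
# Sample output copied from the `gluster pool list` run above.
SAMPLE_POOL_LIST = """\
UUID Hostname State
012e9d88-05fe-4eaf-bb7f-44eb35b0221f server3.example.com Connected
28054617-75f7-498f-9098-0f247042a5ef localhost Connected
"""


def parse_pool_list(text: str) -> dict:
    """Map hostname -> state, skipping the header row."""
    peers = {}
    for line in text.strip().splitlines()[1:]:
        fields = line.split()  # handles both tabs and spaces
        if len(fields) >= 3:
            peers[fields[1]] = fields[2]
    return peers


def disconnected_peers(text: str) -> list:
    """Return hostnames whose state is anything other than Connected."""
    return [host for host, state in parse_pool_list(text).items() if state != "Connected"]


if __name__ == "__main__":
    print(parse_pool_list(SAMPLE_POOL_LIST))
    print(disconnected_peers(SAMPLE_POOL_LIST))
```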

[root@server2 /]# gluster volume create dist server2.example.com:/bricks/brick2 server3.example.com:/bricks/brick3

volume create: dist: success: please start the volume to access data
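When the brick list grows (as in the later replicated and dispersed examples), generating the command can be less error-prone than typing it. A small Python sketch; `volume_create_args` is a hypothetical helper that only builds the argument list and never talks to glusterd:

```python
import shlex


def volume_create_args(name, bricks, replica=None, force=False):
    """Build the argv for `gluster volume create` from (host, brick_path) pairs."""
    args = ["gluster", "volume", "create", name]
    if replica is not None:
        args += ["replica", str(replica)]
    args += [f"{host}:{path}" for host, path in bricks]
    if force:
        args.append("force")
    return args


if __name__ == "__main__":
    dist = volume_create_args(
        "dist",
        [("server2.example.com", "/bricks/brick2"),
         ("server3.example.com", "/bricks/brick3")],
    )
    # Print the equivalent shell command; passing `dist` to subprocess.run() would execute it.
    print(shlex.join(dist))
```

For the dist volume this prints exactly the create command used above.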

[root@server2 /]# gluster volume list

dist

[root@server2 /]# gluster volume start dist

volume start: dist: success

[root@server2 /]# gluster volume status

Status of volume: dist

Gluster process TCP Port RDMA Port Online Pid

------------------------------------------------------------------------------

Brick server2.example.com:/bricks/brick2 49152 0 Y 29179

Brick server3.example.com:/bricks/brick3 49152 0 Y 6742

Task Status of Volume dist

------------------------------------------------------------------------------

There are no active volume tasks
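The Online column is the quickest health signal. A Python sketch that extracts it per brick; it assumes each brick row fits on one line as in this transcript (the real CLI can wrap long brick paths, and as far as I know `gluster volume status <VOLNAME> --xml` is the more robust source for scripts). Names are hypothetical:

```python
import re

# One brick row of `gluster volume status`, e.g.
# "Brick server2.example.com:/bricks/brick2 49152 0 Y 29179"
BRICK_ROW = re.compile(r"^Brick\s+(\S+)\s+\S+\s+\S+\s+([YN])\s+\S+\s*$")

SAMPLE_STATUS = """\
Status of volume: dist
Gluster process TCP Port RDMA Port Online Pid
------------------------------------------------------------------------------
Brick server2.example.com:/bricks/brick2 49152 0 Y 29179
Brick server3.example.com:/bricks/brick3 49152 0 Y 6742
"""


def brick_states(status_text: str) -> dict:
    """Map brick -> True if its Online column is Y."""
    states = {}
    for line in status_text.splitlines():
        m = BRICK_ROW.match(line.strip())
        if m:
            states[m.group(1)] = m.group(2) == "Y"
    return states


if __name__ == "__main__":
    print(brick_states(SAMPLE_STATUS))
```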

##############################################

######### SERVER 3 setup ########################

[root@server3 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 100G 0 disk

├─sda1 8:1 0 1G 0 part /boot

└─sda2 8:2 0 99G 0 part

├─cl-root 253:0 0 91.9G 0 lvm /

├─cl-swap 253:1 0 2.1G 0 lvm [SWAP]

└─cl-home 253:2 0 5G 0 lvm /home

sdb 8:16 0 1G 0 disk

[root@server3 ~]# dnf -y install centos-release-gluster8

[root@server3 ~]# dnf config-manager --set-enabled centos-gluster8

[root@server3 ~]# dnf -y install glusterfs-server

[root@server3 ~]# firewall-cmd --permanent --zone=public --add-service=glusterfs

[root@server3 ~]# firewall-cmd --reload

[root@server3 ~]# systemctl start glusterd

[root@server3 ~]# systemctl enable glusterd

[root@server3 ~]# systemctl status glusterd

Register the hosts

[root@server3 ~]# vi /etc/hosts

192.168.10.210 server2.example.com

192.168.10.220 server3.example.com

192.168.10.230 server4.example.com

[root@server3 ~]# fdisk /dev/sdb

[root@server3 ~]# lsblk -f /dev/sdb

NAME FSTYPE LABEL UUID MOUNTPOINT

sdb

└─sdb1

[root@server3 ~]# mkfs.xfs -i size=512 /dev/sdb1

[root@server3 ~]# lsblk -f /dev/sdb1

[root@server3 ~]# vi /etc/fstab

/dev/sdb1 /bricks xfs defaults 0 0

[root@server3 ~]# mkdir /bricks

[root@server3 ~]# mount -a

[root@server3 ~]# mkdir /bricks/brick3

[root@server3 ~]# df -Th

/dev/sdb1 xfs 1017M 40M 978M 4% /bricks

#######################################

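###### SERVER 4 CLIENT setup ###############

server4 needs the same /etc/hosts entries as the servers, because the FUSE client connects to every brick by hostname.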

[root@server4 ~]# dnf -y install glusterfs-fuse

[root@server4 ~]# mkdir /glusterfs

[root@server4 ~]# mount.glusterfs server2.example.com:/dist /glusterfs

[root@server4 ~]# vi /etc/fstab # _netdev makes the boot wait for the network before mounting

server2.example.com:/dist /glusterfs glusterfs defaults,_netdev 0 0

[root@server4 ~]# df -Th

....

server2.example.com:/dist fuse.glusterfs 2.0G 100M 1.9G 5% /glusterfs

#######################################

########## test #######################

server4

[root@server4 ~]# touch /glusterfs/file{1..5}

[root@server4 ~]# ls -l /glusterfs/

total 0

-rw-r--r--. 1 root root 0 Jan 6 12:03 file1

-rw-r--r--. 1 root root 0 Jan 6 12:03 file2

-rw-r--r--. 1 root root 0 Jan 6 12:03 file3

-rw-r--r--. 1 root root 0 Jan 6 12:03 file4

-rw-r--r--. 1 root root 0 Jan 6 12:03 file5

server2

[root@server2 /]# ls -l /bricks/brick2/

total 0

-rw-r--r--. 2 root root 0 Jan 6 12:03 file3

-rw-r--r--. 2 root root 0 Jan 6 12:03 file4

server3

[root@server3 bricks]# ls -l /bricks/brick3/

total 0

-rw-r--r--. 2 root root 0 Jan 6 12:03 file1

-rw-r--r--. 2 root root 0 Jan 6 12:03 file2

-rw-r--r--. 2 root root 0 Jan 6 12:03 file5
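The test shows that each file lands whole on exactly one brick (file3 and file4 on brick2, the rest on brick3). Conceptually, the distribute translator hashes the file name and picks the brick whose hash range contains that value. A simplified Python sketch of that idea; it uses `zlib.crc32` as a stand-in hash and evenly split ranges, whereas Gluster uses its own hash function and per-directory layouts, so the placements it prints will not necessarily match the output above:

```python
import zlib

HASH_SPACE = 2 ** 32  # 32-bit hash space, split among the bricks


def make_layout(bricks):
    """Give each brick one contiguous, equal-sized slice of the hash space."""
    step = HASH_SPACE // len(bricks)
    layout = []
    for i, brick in enumerate(bricks):
        start = i * step
        end = HASH_SPACE - 1 if i == len(bricks) - 1 else start + step - 1
        layout.append((start, end, brick))
    return layout


def place(filename, layout):
    """Pick the brick whose range contains the file name's hash."""
    h = zlib.crc32(filename.encode())  # stand-in hash, NOT Gluster's real one
    for start, end, brick in layout:
        if start <= h <= end:
            return brick
    raise ValueError("layout does not cover the whole hash space")


if __name__ == "__main__":
    layout = make_layout(["server2:/bricks/brick2", "server3:/bricks/brick3"])
    for name in (f"file{i}" for i in range(1, 6)):
        print(name, "->", place(name, layout))
```

The key property is that placement depends only on the name, so any client computes the same brick without a central lookup.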

-------------------------------------------------------

Problem and fix when reusing bricks after deleting an existing Gluster volume

-------------------------------------------------------

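The transcript below comes from a run where the distribute volume was named vol_dist; substitute your own volume name (dist above).

[root@server4 ~]# umount /glusterfs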

[root@server2 /]# gluster volume stop vol_dist

Stopping volume will make its data inaccessible. Do you want to continue? (y/n) y

volume stop: vol_dist: success

[root@server2 /]# gluster volume delete vol_dist

Deleting volume will erase all information about the volume. Do you want to continue? (y/n) y

volume delete: vol_dist: success

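Detaching a peer is only needed if that host had been probed into the trusted storage pool; in the setup above server4 is just a client.

[root@server2 /]# gluster peer detach server4.example.com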

All clients mounted through the peer which is getting detached need to be remounted using one of the other active peers in the trusted storage pool to ensure client gets notification on any changes done on the gluster configuration and if the same has been done do you want to proceed? (y/n) y

peer detach: success

Creating a new volume on the old brick directories fails:

[root@server2 /]# gluster volume create vol_distribute server2.example.com:/bricks/brick2 server3.example.com:/bricks/brick3

volume create: vol_distribute: failed: /bricks/brick2 is already part of a volume

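The Gluster metadata left on the brick must be removed first, as below (do the same on server3 for /bricks/brick3):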

[root@server2 /]# setfattr -x trusted.glusterfs.volume-id /bricks/brick2

[root@server2 /]# setfattr -x trusted.gfid /bricks/brick2

[root@server2 /]# rm -rf /bricks/brick2/.glusterfs
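The same cleanup can be scripted. A minimal, Linux-only Python sketch (`reset_brick_dir` is a hypothetical name) that removes the two extended attributes and the .glusterfs directory used above. It is destructive, must run as root to see `trusted.*` attributes, and should only be pointed at a brick whose old volume has already been deleted:

```python
import errno
import os
import shutil
import tempfile

# Metadata that marks a directory as a (former) Gluster brick.
GLUSTER_XATTRS = ("trusted.glusterfs.volume-id", "trusted.gfid")


def reset_brick_dir(path: str) -> list:
    """Python equivalent of the setfattr -x / rm -rf steps above.

    Returns the names of what was removed.
    """
    removed = []
    try:
        present = os.listxattr(path)
    except OSError as exc:
        if exc.errno != errno.ENOTSUP:  # only tolerate "no xattr support"
            raise
        present = []
    for name in GLUSTER_XATTRS:
        if name in present:
            os.removexattr(path, name)
            removed.append(name)
    meta_dir = os.path.join(path, ".glusterfs")
    if os.path.isdir(meta_dir):
        shutil.rmtree(meta_dir)
        removed.append(".glusterfs")
    return removed


if __name__ == "__main__":
    # Demo on a throwaway directory, never on a live brick.
    demo = tempfile.mkdtemp()
    os.mkdir(os.path.join(demo, ".glusterfs"))
    print(reset_brick_dir(demo))
```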

After the cleanup, the volume can be created again:

[root@server2 /]# gluster volume create dist server2.example.com:/bricks/brick2 server3.example.com:/bricks/brick3

volume create: dist: success: please start the volume to access data

###########################################################

################ Replicated setup ###############################

###########################################################

File replication: every brick in the replica set holds a full copy of each file.

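On server2 (if /bricks is still mounted from the previous setup, unmount it first; mkfs.xfs needs -f to overwrite the existing XFS filesystem, and the brick directory is created after mounting so that it lives on the new filesystem):

[root@server2 ~]# mkfs.xfs -f -i size=512 /dev/sdb1

[root@server2 ~]# mount /dev/sdb1 /bricks

[root@server2 ~]# mkdir -p /bricks/brick2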

[root@server2 ~]# gluster peer probe server3.example.com

peer probe: success

For replicated volumes, an arbiter or replica 3 is recommended; replica 2 is prone to split-brain, as the CLI warns:

[root@server2 ~]# gluster volume create replication replica 2 server2.example.com:/bricks/brick2 server3.example.com:/bricks/brick3

Replica 2 volumes are prone to split-brain. Use Arbiter or Replica 3 to avoid this. See: http://docs.gluster.org/en/latest/Administrator%20Guide/Split%20brain%20and%20ways%20to%20deal%20with%20it/.

Do you still want to continue?

(y/n) y

volume create: replication: success: please start the volume to access data
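The warning is about client-side quorum. With cluster.quorum-type set to auto, Gluster documents the rule as: allow writes only if more than half of a replica set's bricks are up, or exactly half including the first brick. A small Python model of that rule (not Gluster code; the function name is hypothetical) shows why replica 2 is awkward: whether writes continue after a single failure depends on which brick failed, and without quorum the two copies can diverge into split-brain. Replica 3 or an arbiter gives a real majority:

```python
def auto_quorum_allows_writes(bricks_up):
    """Model the documented `cluster.quorum-type auto` rule for one replica set.

    bricks_up: one bool per brick, in the order the bricks were listed.
    Writes are allowed if more than half of the bricks are up, or exactly
    half are up and the first brick is one of them.
    """
    total = len(bricks_up)
    alive = sum(bricks_up)
    if 2 * alive > total:
        return True
    return 2 * alive == total and bricks_up[0]


if __name__ == "__main__":
    # replica 2: losing server3 keeps writes, losing server2 (first brick) blocks them
    print(auto_quorum_allows_writes([True, False]))  # True
    print(auto_quorum_allows_writes([False, True]))  # False
```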

[root@server2 ~]# gluster peer status

[root@server2 ~]# gluster volume start replication

volume start: replication: success

[root@server2 ~]# gluster volume status

Status of volume: replication

Gluster process TCP Port RDMA Port Online Pid

------------------------------------------------------------------------------

Brick server2.example.com:/bricks/brick2 49152 0 Y 10266

Brick server3.example.com:/bricks/brick3 49152 0 Y 8350

Self-heal Daemon on localhost N/A N/A Y 10283

Self-heal Daemon on server3.example.com N/A N/A Y 8367

Task Status of Volume replication

------------------------------------------------------------------------------

There are no active volume tasks


On server3 (prepare the brick the same way before creating the volume on server2):

[root@server3 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 100G 0 disk

├─sda1 8:1 0 1G 0 part /boot

└─sda2 8:2 0 99G 0 part

├─cl-root 253:0 0 91.9G 0 lvm /

├─cl-swap 253:1 0 2.1G 0 lvm [SWAP]

└─cl-home 253:2 0 5G 0 lvm /home

sdb 8:16 0 1G 0 disk

└─sdb1 8:17 0 1023M 0 part

[root@server3 ~]# mkdir /glusterfs

[root@server3 ~]# mkfs.xfs -f -i size=512 /dev/sdb1

[root@server3 ~]# mount /dev/sdb1 /bricks

[root@server3 ~]# mkdir -p /bricks/brick3

On server4:

[root@server4 ~]# mount.glusterfs server2.example.com:/replication /glusterfs

[root@server4 ~]# df -Th

server2.example.com:/replication fuse.glusterfs 1017M 50M 968M 5% /glusterfs

---------------------------

Testing

----------------------------

[root@server4 ~]# touch /glusterfs/file{10..15}

[root@server4 ~]# ls -l /glusterfs/

total 0

-rw-r--r--. 1 root root 0 Jan 6 15:15 file10

-rw-r--r--. 1 root root 0 Jan 6 15:15 file11

-rw-r--r--. 1 root root 0 Jan 6 15:15 file12

-rw-r--r--. 1 root root 0 Jan 6 15:15 file13

-rw-r--r--. 1 root root 0 Jan 6 15:15 file14

-rw-r--r--. 1 root root 0 Jan 6 15:15 file15


[root@server2 ~]# ls -l /bricks/brick2/

total 0

-rw-r--r--. 2 root root 0 Jan 6 15:15 file10

-rw-r--r--. 2 root root 0 Jan 6 15:15 file11

-rw-r--r--. 2 root root 0 Jan 6 15:15 file12

-rw-r--r--. 2 root root 0 Jan 6 15:15 file13

-rw-r--r--. 2 root root 0 Jan 6 15:15 file14

-rw-r--r--. 2 root root 0 Jan 6 15:15 file15


[root@server3 ~]# ls -l /bricks/brick3/

total 0

-rw-r--r--. 2 root root 0 Jan 6 15:15 file10

-rw-r--r--. 2 root root 0 Jan 6 15:15 file11

-rw-r--r--. 2 root root 0 Jan 6 15:15 file12

-rw-r--r--. 2 root root 0 Jan 6 15:15 file13

-rw-r--r--. 2 root root 0 Jan 6 15:15 file14

-rw-r--r--. 2 root root 0 Jan 6 15:15 file15


###############################################

############ Dispersed setup ###################

##############################################

Each file is split into fragments, with erasure-coded redundancy, and the fragments are spread across the bricks.

On server2:

[root@server2 ~]# mkdir -p /bricks/brick2 /bricks/brick12 /bricks/brick22

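4 bricks hold data and 2 hold redundancy (disperse-data 4 redundancy 2), so any 2 bricks can fail. The force option is required because several bricks of the same disperse set are on one server.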

[root@server2 ~]# gluster volume status

No volumes present

[root@server2 ~]# gluster volume create disperse_vol disperse-data 4 redundancy 2 \

> server2.example.com:/bricks/brick2 \

> server2.example.com:/bricks/brick12 \

> server2.example.com:/bricks/brick22 \

> server3.example.com:/bricks/brick3 \

> server3.example.com:/bricks/brick13 \

> server3.example.com:/bricks/brick23 \

> force

volume create: disperse_vol: success: please start the volume to access data
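Two quick checks on this layout, as a Python sketch with hypothetical function names: the constraint I understand the Gluster docs to impose (redundancy of at least 1, and total bricks greater than 2 × redundancy), and whether the volume survives losing a whole server. In this lab each server holds 3 of the 6 fragments, which is more than redundancy 2, so losing server2 or server3 would leave only 3 fragments where 4 are needed; that is fine for testing but not for production:

```python
from collections import Counter


def disperse_layout(data, redundancy):
    """Basic sanity rules for disperse-data / redundancy."""
    if redundancy < 1:
        raise ValueError("redundancy must be at least 1")
    total = data + redundancy
    if 2 * redundancy >= total:
        raise ValueError("total bricks must be greater than 2 * redundancy")
    return {"bricks": total, "tolerated_failures": redundancy,
            "usable_fraction": data / total}


def survives_host_loss(bricks, redundancy):
    """True if losing any single host loses at most `redundancy` fragments."""
    per_host = Counter(host for host, _ in bricks)
    return max(per_host.values()) <= redundancy


if __name__ == "__main__":
    print(disperse_layout(4, 2))
    bricks = [("server2", f"/bricks/brick{n}") for n in (2, 12, 22)] + \
             [("server3", f"/bricks/brick{n}") for n in (3, 13, 23)]
    print(survives_host_loss(bricks, 2))  # False: 3 fragments per host > 2
```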

[root@server2 ~]# gluster volume start disperse_vol

volume start: disperse_vol: success

[root@server2 ~]# gluster volume status

Status of volume: disperse_vol

Gluster process TCP Port RDMA Port Online Pid

------------------------------------------------------------------------------

Brick server2.example.com:/bricks/brick2 49152 0 Y 19643

Brick server2.example.com:/bricks/brick12 49153 0 Y 19659

Brick server2.example.com:/bricks/brick22 49154 0 Y 19675

Brick server3.example.com:/bricks/brick3 49152 0 Y 15288

Brick server3.example.com:/bricks/brick13 49153 0 Y 15304

Brick server3.example.com:/bricks/brick23 49154 0 Y 15323

Self-heal Daemon on localhost N/A N/A Y 19698

Self-heal Daemon on server3.example.com N/A N/A Y 15341

Task Status of Volume disperse_vol

------------------------------------------------------------------------------

There are no active volume tasks

On server3 (create these brick directories before running the volume create on server2):

[root@server3 ~]# mkdir /bricks/brick3; mkdir /bricks/brick13; mkdir /bricks/brick23

On server4:

[root@server4 ~]# mount.glusterfs server2.example.com:/disperse_vol /glusterfs/

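Testing: each file is split across the bricks by size. With 4 data bricks, a 512M file is stored as a 128M fragment on each of the 6 bricks (4 data + 2 redundancy).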

[root@server4 ~]# dd if=/dev/zero of=/glusterfs/file10 bs=1M count=512

[root@server4 ~]# ls -lh /glusterfs/

total 512M

-rw-r--r--. 1 root root 512M Jan 6 15:58 file10

[root@server2 ~]# ls -lh /bricks/brick2

total 129M

-rw-r--r--. 2 root root 128M Jan 6 15:58 file10

[root@server2 ~]# ls -lh /bricks/brick12

total 129M

-rw-r--r--. 2 root root 128M Jan 6 15:58 file10

[root@server2 ~]# ls -lh /bricks/brick22

total 129M

-rw-r--r--. 2 root root 128M Jan 6 15:58 file10


[root@server3 ~]# ls -lh /bricks/brick3

total 129M

-rw-r--r--. 2 root root 128M Jan 6 15:58 file10

[root@server3 ~]# ls -lh /bricks/brick13

total 129M

-rw-r--r--. 2 root root 128M Jan 6 15:58 file10

[root@server3 ~]# ls -lh /bricks/brick23

total 129M

-rw-r--r--. 2 root root 128M Jan 6 15:58 file10
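The sizes match the disperse arithmetic: each brick stores roughly file size / data bricks, and every redundancy brick stores a fragment of the same size. A back-of-the-envelope Python sketch with hypothetical names; it ignores the rounding of fragments to Gluster's internal block size, whose exact value is not asserted here:

```python
MiB = 1024 * 1024


def fragment_size(file_size: int, data_bricks: int) -> int:
    """Approximate per-brick fragment size: the file is split over the data bricks."""
    return -(-file_size // data_bricks)  # ceiling division


def raw_usage(file_size: int, data_bricks: int, redundancy: int) -> int:
    """Space consumed across all bricks, including the redundancy fragments."""
    return fragment_size(file_size, data_bricks) * (data_bricks + redundancy)


if __name__ == "__main__":
    size = 512 * MiB  # the dd test file above
    print(fragment_size(size, 4) // MiB, "MiB per brick")  # 128 MiB per brick
    print(raw_usage(size, 4, 2) // MiB, "MiB in total")    # 768 MiB in total
```

So the 512M file costs 768M of raw space, i.e. a 4/6 storage efficiency.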

#######################################################

############ Distributed-Replicated setup ###################

#######################################################

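Files are distributed across replica sets (distribution + replication).

Note: replica sets are formed from consecutive bricks in the create command, so in the command below each pair sits on a single server (brick2/brick12 on server2, brick3/brick13 on server3). That is why force is required, and losing one server loses both copies of its files. For real use, interleave the servers: server2.example.com:/bricks/brick2 server3.example.com:/bricks/brick3 server2.example.com:/bricks/brick12 server3.example.com:/bricks/brick13.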

On server2:

[root@server2 ~]# gluster volume create dist_replica replica 2 \

> server2.example.com:/bricks/brick2 \

> server2.example.com:/bricks/brick12 \

> server3.example.com:/bricks/brick3 \

> server3.example.com:/bricks/brick13 \

> force

volume create: dist_replica: success: please start the volume to access data
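The pairing can be checked mechanically before creating the volume. A Python sketch of the grouping rule (consecutive bricks form a replica set) with hypothetical helper names; it flags both pairs of the command above as single-host and shows that interleaving the servers avoids it:

```python
def replica_sets(bricks, replica):
    """Group bricks the way the CLI does: consecutive runs of `replica` bricks."""
    if len(bricks) % replica:
        raise ValueError("brick count must be a multiple of the replica count")
    return [bricks[i:i + replica] for i in range(0, len(bricks), replica)]


def single_host_sets(bricks, replica):
    """Replica sets whose copies all live on one host (no protection from host loss)."""
    return [s for s in replica_sets(bricks, replica)
            if len({host for host, _ in s}) == 1]


AS_CREATED = [("server2", "/bricks/brick2"), ("server2", "/bricks/brick12"),
              ("server3", "/bricks/brick3"), ("server3", "/bricks/brick13")]
INTERLEAVED = [AS_CREATED[0], AS_CREATED[2], AS_CREATED[1], AS_CREATED[3]]

if __name__ == "__main__":
    print(single_host_sets(AS_CREATED, 2))   # both pairs are single-host
    print(single_host_sets(INTERLEAVED, 2))  # []
```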


[root@server2 ~]# gluster vol status

Volume dist_replica is not started

[root@server2 ~]# gluster vol start dist_replica

volume start: dist_replica: success


[root@server2 ~]# gluster vol status

Status of volume: dist_replica

Gluster process TCP Port RDMA Port Online Pid

------------------------------------------------------------------------------

Brick server2.example.com:/bricks/brick2 49152 0 Y 24273

Brick server2.example.com:/bricks/brick12 49153 0 Y 24289

Brick server3.example.com:/bricks/brick3 49152 0 Y 21544

Brick server3.example.com:/bricks/brick13 49153 0 Y 21560

Self-heal Daemon on localhost N/A N/A Y 24312

Self-heal Daemon on server3.example.com N/A N/A Y 21577

Task Status of Volume dist_replica

------------------------------------------------------------------------------

There are no active volume tasks

On server3:

No additional steps.

On server4:

[root@server4 ~]# mount.glusterfs server2.example.com:/dist_replica /glusterfs

[root@server4 ~]# df -Th

.....

server2.example.com:/dist_replica fuse.glusterfs 1017M 51M 967M 5% /glusterfs

Testing

[root@server4 ~]# dd if=/dev/zero of=/glusterfs/file1 bs=1M count=10

[root@server4 ~]# dd if=/dev/zero of=/glusterfs/file2 bs=1M count=10

[root@server4 ~]# dd if=/dev/zero of=/glusterfs/file3 bs=1M count=10

[root@server4 ~]# dd if=/dev/zero of=/glusterfs/file4 bs=1M count=10

[root@server4 ~]# dd if=/dev/zero of=/glusterfs/file5 bs=1M count=10

[root@server4 ~]# ls -l /glusterfs/

total 51200

-rw-r--r--. 1 root root 10485760 Jan 6 16:36 file1

-rw-r--r--. 1 root root 10485760 Jan 6 16:36 file2

-rw-r--r--. 1 root root 10485760 Jan 6 16:36 file3

-rw-r--r--. 1 root root 10485760 Jan 6 16:36 file4

-rw-r--r--. 1 root root 10485760 Jan 6 16:36 file5

[root@server2 ~]# ls -l /bricks/brick2

total 20480

-rw-r--r--. 2 root root 10485760 Jan 6 16:36 file3

-rw-r--r--. 2 root root 10485760 Jan 6 16:36 file4

[root@server2 ~]# ls -l /bricks/brick12

total 20480

-rw-r--r--. 2 root root 10485760 Jan 6 16:36 file3

-rw-r--r--. 2 root root 10485760 Jan 6 16:36 file4

[root@server3 ~]# ls -l /bricks/brick3

total 30720

-rw-r--r--. 2 root root 10485760 Jan 6 16:36 file1

-rw-r--r--. 2 root root 10485760 Jan 6 16:36 file2

-rw-r--r--. 2 root root 10485760 Jan 6 16:36 file5

[root@server3 ~]# ls -l /bricks/brick13

total 30720

-rw-r--r--. 2 root root 10485760 Jan 6 16:36 file1

-rw-r--r--. 2 root root 10485760 Jan 6 16:36 file2

-rw-r--r--. 2 root root 10485760 Jan 6 16:36 file5
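The listings show the two properties of a distributed-replicated volume: each file lives in exactly one replica set, and both bricks of a set hold the same files. A Python sketch that checks those properties on the listings above (hypothetical helper; it compares names only, while the proper tool for pending copy differences is `gluster volume heal <VOLNAME> info`):

```python
# Directory listings copied from the test above (brick -> file names).
LISTINGS = {
    "server2:/bricks/brick2": {"file3", "file4"},
    "server2:/bricks/brick12": {"file3", "file4"},
    "server3:/bricks/brick3": {"file1", "file2", "file5"},
    "server3:/bricks/brick13": {"file1", "file2", "file5"},
}
REPLICA_SETS = [
    ["server2:/bricks/brick2", "server2:/bricks/brick12"],
    ["server3:/bricks/brick3", "server3:/bricks/brick13"],
]


def check_dist_replica(listings, replica_sets):
    """Return a list of problems; an empty list means the layout is consistent."""
    problems = []
    owner = {}  # file name -> index of the replica set holding it
    for idx, members in enumerate(replica_sets):
        first = listings[members[0]]
        for brick in members[1:]:
            if listings[brick] != first:
                problems.append(f"set {idx}: {brick} differs from {members[0]}")
        for name in sorted(first):
            if name in owner:
                problems.append(f"{name} is in sets {owner[name]} and {idx}")
            owner[name] = idx
    return problems


if __name__ == "__main__":
    print(check_dist_replica(LISTINGS, REPLICA_SETS))  # []
```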
